Marc Levoy

Note:

I retired from Stanford in 2015. Being Emeritus, I am not accepting new PhD

students, postdocs, visiting scholars, or interns. That said, I visit

Stanford semi-regularly, and am happy to meet with students or other members of

the university community by appointment. I can also serve on PhD committees if

there is a reasonable subject fit.

Biographical sketch

Marc Levoy is the VMware Founders Professor of Computer Science (Emeritus) at

Stanford University and a Vice President and Fellow at Adobe. In previous lives

he worked on computer-assisted cartoon animation (1970s), volume rendering

(1980s), 3D scanning (1990s), light field imaging (2000s), and computational

photography (2010s). At Stanford he taught computer graphics, digital

photography, and the science of art. At Google he launched Street View,

co-designed the library book scanner, and led the team that created HDR+,

Portrait Mode, and Night Sight for Pixel smartphones. These phones won

DPReview's Innovation of the Year (2017 and 2018) and Smartphone Camera of the

Year (2019), and Mobile World Congress's Disruptive Innovation Award

(2019). Levoy's awards include Cornell University's Charles Goodwin Sands Medal

for best undergraduate thesis (1976) and the ACM SIGGRAPH Computer Graphics

Achievement Award (1996). He is an ACM Fellow (2007) and member of the National

Academy of Engineering (2022).

My Stanford career

Selected highlights


Links to the rest

- List of my publications

(through 2019 at least,

with pictures, abstracts, and links to papers)

- Research at Stanford

(through 2009, text-only, with links)

- Teaching at Stanford

(through 2010, text-only, with links)

Click here for a

public version of CS 178 (Digital Photography) that includes video

recordings of the lectures.

(Or click here for a

YouTube playlist of the lectures.)

- Slides from talks

(through 2019 at least)

- Links to all of our lab's research projects

(through 2014)

- Links to our lab's technical publications

(through 2015)

- The people in our laboratory

(through 2014, except that links to faculty are up to date)

- Group photos

(including hikes, ski trips, snorkeling, Death Marches, etc.)

And some photo essays

|

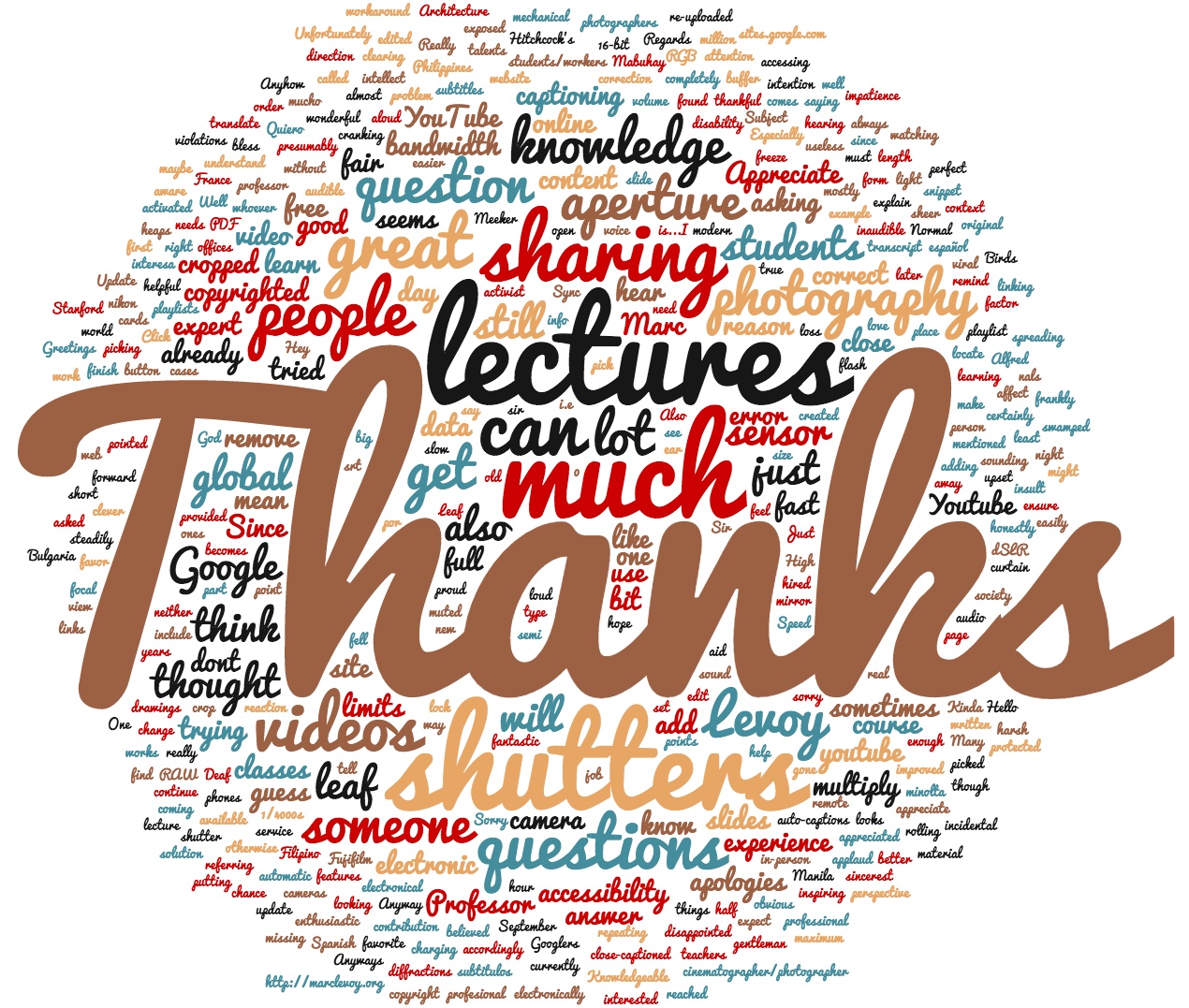

I love teaching. After becoming full-time at Google, and finding people there

who wanted to know more about photography, I decided to teach a revised but

nearly full version of my Stanford course

CS 178 (Digital Photography) at Google. These lectures were recorded and

edited to remove proprietary material, at which point Google permitted me to

make them public. Here is a link to this course, which I called Lectures on Digital

Photography. I also uploaded the lecture videos as a

YouTube playlist, where to my surprise they

went viral in September 2016. A Googler made a word cloud (at left)

algorithmically from the comments on those videos and sent it to me as a gift.

In a word cloud the size of each word is proportional to the number of times it

appears in the text being processed by the algorithm. It's one of the nicest

gifts I have ever received.
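
As a rough illustration of the sizing rule described above, here is a minimal Python sketch. It is not the tool the Googler used; the function name `word_sizes` and the 72-point maximum are assumptions chosen for the example. Each word's font size is simply scaled in proportion to how often it appears.

```python
import re
from collections import Counter


def word_sizes(text, max_pt=72.0):
    """Map each word to a font size proportional to its count in text."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    if not counts:
        return {}
    top = max(counts.values())
    # The most frequent word gets max_pt; every other word scales linearly.
    return {word: max_pt * count / top for word, count in counts.items()}


sizes = word_sizes("thank you thank you thank professor")
```

Real word clouds usually also drop common stop words and pack the words into a layout, which this sketch leaves out.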

|

|

For several years

my research focused on

making cameras programmable.

One concrete outcome of this project was our Frankencamera

architecture, published in this

SIGGRAPH 2010 paper

and commercialized in Android's

Camera2/HAL3 APIs.

To help me understand the challenges of building photographic applications for

a mobile platform, I tried writing an iPhone app myself. The result was SynthCam. By capturing, tracking, aligning, and

blending a sequence of video frames, the app made the near-pinhole aperture on

an iPhone camera act like the large aperture of a single-lens-reflex (SLR)

camera. This includes the SLR's shallow depth of field and resistance to noise

in low light. The app launched

in January 2011, and seeing it appear in the App Store was a thrill.

I originally charged $0.99, but eventually made it free.

Here are a few of my favorite reviews of the app:

MIT Technology Review,

WiReD,

The Economist.

Unfortunately, with my growing involvement at Google I stopped maintaining the

app, and it stopped working with iOS 11. That said, SynthCam's ability to

SeeInTheDark inspired the development of

Night Sight, described in more detail below under My Google career.
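
To illustrate why blending a sequence of aligned frames resists noise in low light, here is a minimal Python sketch. It is not SynthCam's code: it assumes the frames have already been tracked and aligned, models sensor noise as Gaussian, and uses hypothetical names (`simulate_burst`, `blend`). Under those assumptions, averaging N frames should cut the noise by roughly the square root of N.

```python
import random
import statistics


def simulate_burst(true_value, noise_sigma, num_frames, num_pixels, seed=0):
    """Simulate noisy captures of a flat gray patch (assumed Gaussian noise)."""
    rng = random.Random(seed)
    return [
        [rng.gauss(true_value, noise_sigma) for _ in range(num_pixels)]
        for _ in range(num_frames)
    ]


def blend(frames):
    """Average already-aligned frames pixel by pixel."""
    n = len(frames)
    return [sum(pixel_values) / n for pixel_values in zip(*frames)]


frames = simulate_burst(true_value=100.0, noise_sigma=10.0,
                        num_frames=16, num_pixels=4000)
single_noise = statistics.pstdev(frames[0])      # noise in one frame
merged_noise = statistics.pstdev(blend(frames))  # noise after blending 16 frames
```

With 16 frames the ratio `single_noise / merged_noise` should come out near 4. The sketch covers only the noise benefit; the shallow depth of field depends on how the frames are aligned before blending, which is not modeled here.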

|

|

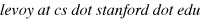

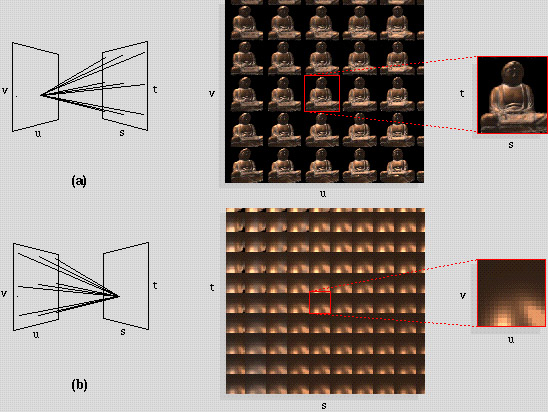

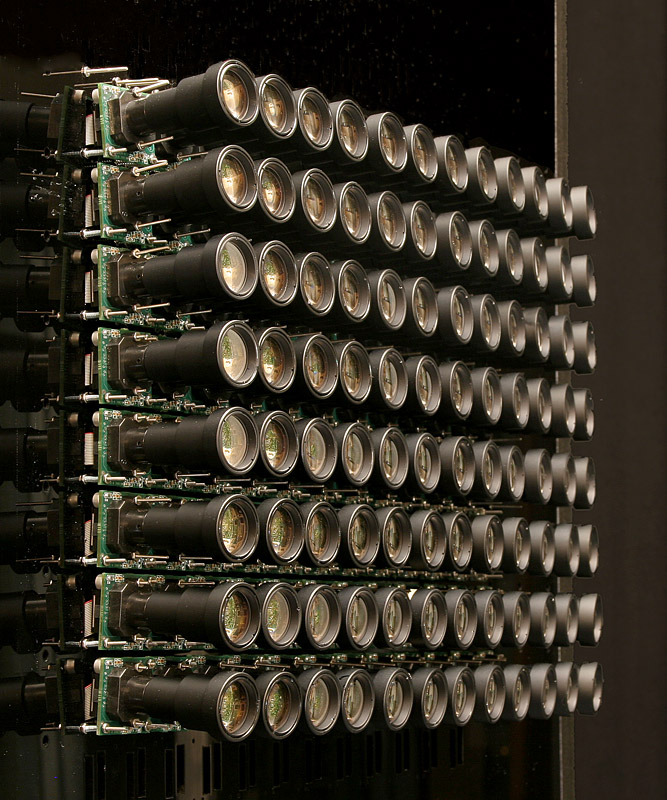

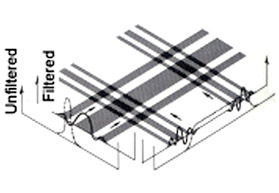

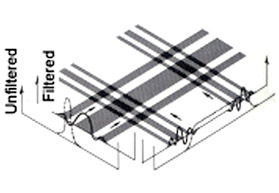

In 1999 the National Academies published

Funding a Revolution: Government Support for Computing Research. This

landmark study, sometimes called the Brooks-Sutherland report, argued that

research in computer science often takes 15 years to pay off. The

iconic illustration

from that report is reproduced at right. In 1996

Pat Hanrahan and I began

working on

light fields and synthetic focusing, supported by the National Science

Foundation. In 2005

Ren Ng, a PhD student in our lab, worked out an optical design that allowed

dense light fields to be captured using a handheld camera. This design enabled

everyday photographs to be refocused after they are captured. I worked on this

technology alongside Ren in the Stanford Graphics Laboratory,

and later applied it to

microscopy,

but the key ideas behind the light field camera were his. (Ren's doctoral

dissertation, titled

Digital

Light Field Photography, received the 2006 ACM Doctoral Dissertation

Award.) In the same year Ren started a company called Refocus Imaging to

commercialize this technology. In 2011 that company, renamed Lytro, announced

its first camera. So 15 years from initial idea to first product. An exciting

ride, but a long wait. At left is the Lytro 1 camera, and below it the first

picture I took using the camera, refocused after capture at a near distance,

then a far distance. Unfortunately, aside from being refocusable Lytro cameras

didn't take great pictures, and refocusability wasn't a strong enough

differentiating feature to drive consumer demand, so the product (and the

follow-on Lytro Illum camera) weren't successful.

Eventually,

portrait modes

on mobile cameras provided similar refocusing and

shallow depth of field, but without sacrificing

spatial resolution as microlens-based light field cameras do. While the

synthetically defocused pictures produced by mobile cameras are not

physically correct, it's hard to tell the difference.

BTW,

Ren Ng

is now a professor in EECS at UC Berkeley.


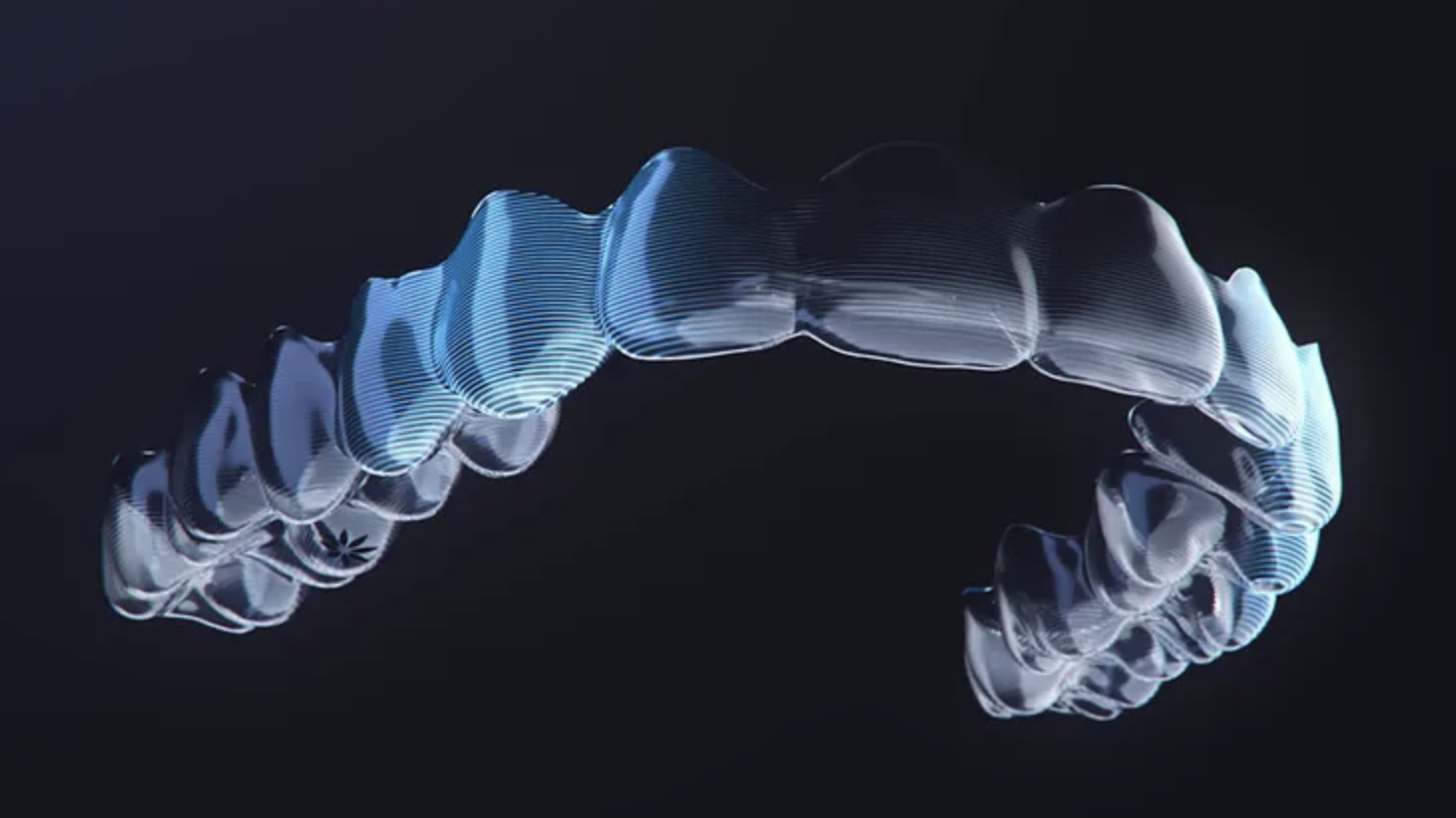

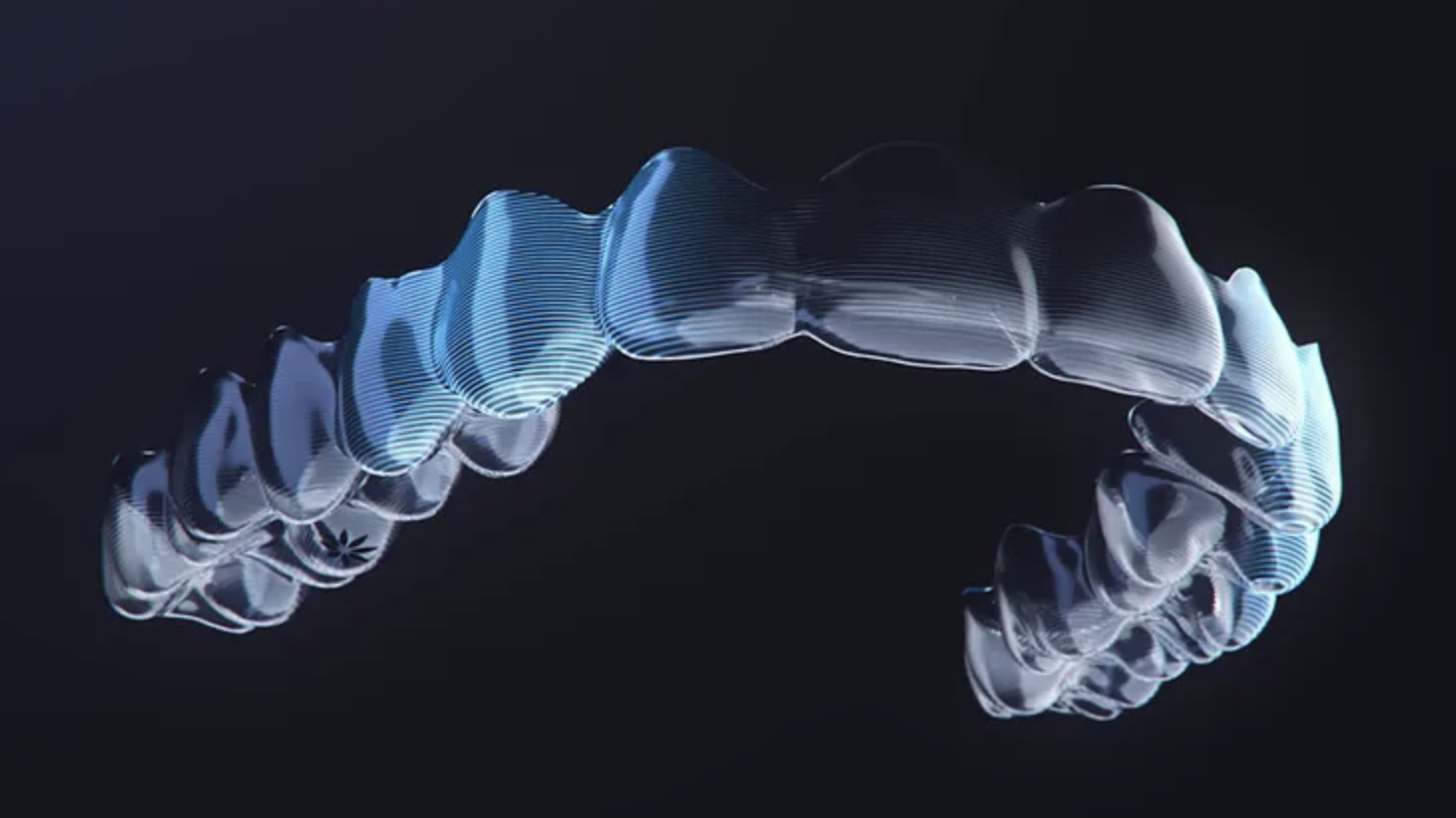

What do the two images at left have in common? In the mid-1990s, three students

in my lab (Andrew Beers, Brian Freyburger, and Apostolos Lerios) left the PhD

program to co-found Invisalign.

The company's genesis is described in this

Interesting Engineering article,

from which the upper image at left was taken. Initially they planned to use 3D

laser scanning (3rd image from left above). They later pivoted to taking a mold

of your teeth, slicing the mold finely using a milling machine, and photographing

each slice to build a 3D model. (They may have since pivoted to another

technology.) This gave me an idea - why not slice everyday objects thinly,

photograph each slice, and volume render the resulting stack of images? As an

experiment, I glued together blocks of wood (lower image at left), sliced the

block thinly using Invisalign's

milling machine,

and

photographed each slice. Here's a grayscale

video of the resulting image stack. The objects at lower right are pine

cones embedded in resin. Isn't it interesting how wood grain changes shape over

depth? Watch the knots move around in this

block of maple.

Knots are twigs from an early stage of tree growth, which eventually become

embedded in the thickening branch from which they sprang. My plan was to volume

render this and other natural objects (marble veining?), and write a book (or

create a web site) of visualizations. I would call the book

Volumegraphica (or Volumegraphia, inspired by Robert Hooke's 1665 book

Micrographia, which

contains beautiful drawings of what he could see through a microscope). An early

attempt at volume rendering that block of maple is shown at right. Click on it

for a video; you can clearly see the twig buried inside the block.

Unfortunately, I never found time to pursue this sabbatical-type project,

becoming involved instead in multi-camera arrays and light field microscopy (see

images above). I also found it challenging to fix natural objects so they don't

fall apart in the milling machine, especially if they contain cavities (like

conch shells). For more details, see this

slide deck. And for some related projects that use 3D medical imaging

technologies instead of physical slicing, see the Visible Human

Project, or this stunning

volume rendering of a mouse tail from

Resolution Sciences.
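
The idea of photographing each slice and volume rendering the resulting stack can be sketched in a few lines of Python. This is a minimal illustration, not the renderer used for the maple block: it views the stack straight down the slicing axis, uses a simple emission-absorption model, and the function name `composite_front_to_back` and the `opacity_of` transfer function are assumptions made for the example.

```python
def composite_front_to_back(stack, opacity_of):
    """Composite grayscale slices (front slice first) into a single image.

    stack      -- list of 2D lists of intensities in [0, 1], one per slice
    opacity_of -- function mapping an intensity to an opacity in [0, 1]
    """
    rows, cols = len(stack[0]), len(stack[0][0])
    image = [[0.0] * cols for _ in range(rows)]
    transmittance = [[1.0] * cols for _ in range(rows)]  # light not yet absorbed
    for slice_image in stack:
        for r in range(rows):
            for c in range(cols):
                value = slice_image[r][c]
                alpha = opacity_of(value)
                # Add this slice's contribution, dimmed by everything in front of it.
                image[r][c] += transmittance[r][c] * alpha * value
                transmittance[r][c] *= 1.0 - alpha
    return image


# Two 1x1 slices: a half-transparent gray slice in front of an opaque white one.
rendered = composite_front_to_back([[[0.5]], [[1.0]]], opacity_of=lambda v: v)
```

Choosing `opacity_of` is where the visualization gets interesting: making ordinary wood nearly transparent and dense knots opaque is one way a buried twig could be made to stand out.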

|

|

Note: I retired from Stanford in 2015. As the note in the box at the

top of this page says, I am not accepting new PhD students, postdocs, visiting

scholars, or summer interns.

Please don't email me asking about opportunities in my research group

at Stanford; there is no such group, and there hasn't been one since 2015.

Ask me instead about Adobe!

My Google career

Selected highlights



Gaurav Garg taking video for CityBlock,

later productized as Google StreetView

|

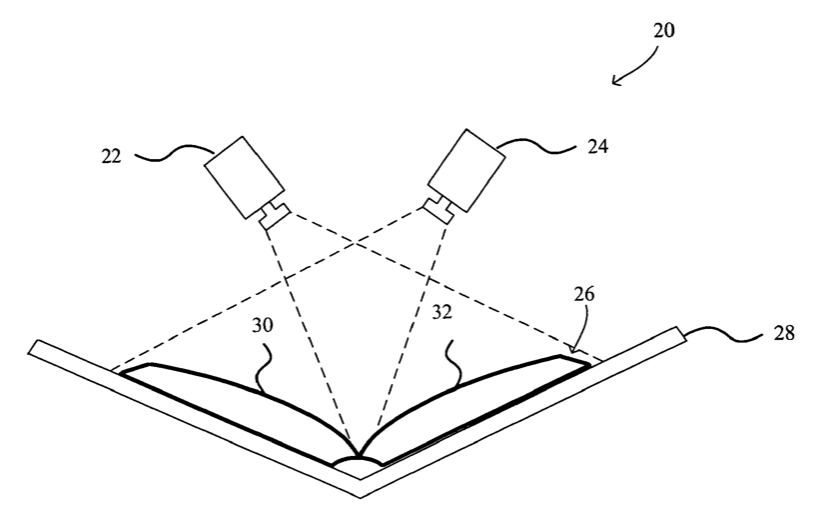

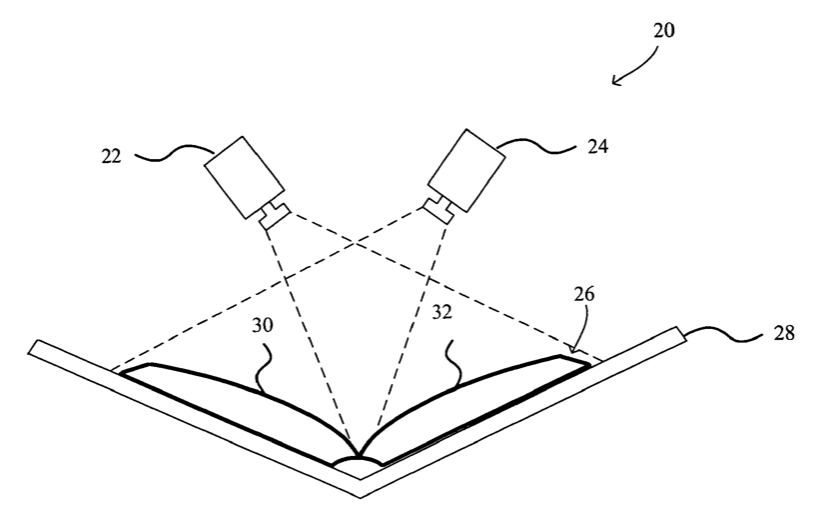

Figure 1 from US patent 7,586,655

on the design of a book scanner

|

Wearing Glass at a public photo walk,

photo by Chris Chabot (2012)

|

Portrait Mode on Pixel 2,

photo by Marc Levoy (2017)

|

Night Sight on Pixel 3,

photo by Diego Perez (2018)

|

Astrophotography on Pixel 4

photo by Florian Kainz (2019)

|

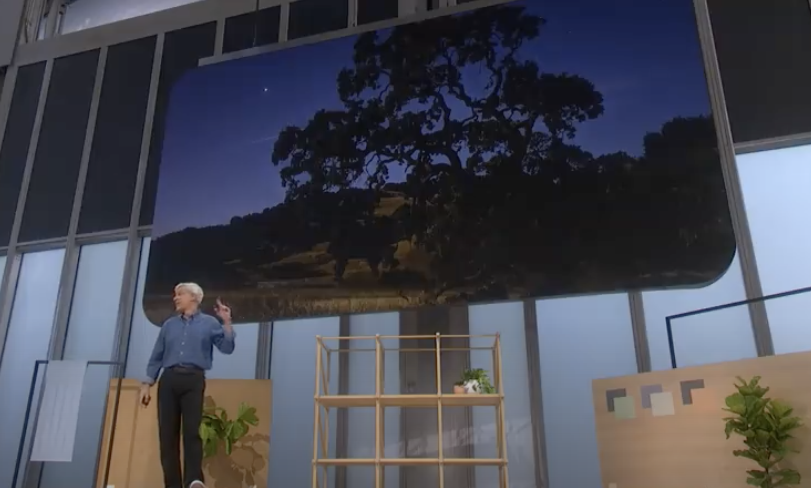

My portion of the Keynote address

at the launch of Pixel 4 (2019)

|

Chronology

In 1993, while an Assistant Professor at Stanford, I lent a few disk drives to

PhD students David Filo and Jerry Yang so they could build a table of contents

of the internet. This later became

Yahoo.

In 1996 Larry Page and Sergey Brin, also PhD students at Stanford, began

assembling a pile of disk drives two doors down from my office to build an

index of the internet. This later became

Google.

Hmmm, table of contents versus index... At the time both ideas made some sense,

but history shows which approach scaled better as the internet grew. (While

Larry shared an office with my grad students, I also got to know Sergey well,

as a student in one of my computer graphics courses.)

In 2002 I launched a Google-funded Stanford research project called

CityBlock,

which Google later commercialized as

StreetView.

For an overview of the Stanford project,

see this

talk I gave at the 2004 Stanford Computer Science Department retreat. This

article in TechCrunch

outlines the project's later history at Google, except that the video Larry

Page and Marissa Mayer captured while driving around San Francisco predated my

project at Stanford, not the other way around as the article says. (I still

have his video in my attic.)

In 2003, working as a consultant for Google, I co-designed the

book scanner for Google's

Project Ocean,

whose goal was to non-destructively digitize millions of books from five of the

world's largest libraries: the University of Michigan, Harvard (Harvard

University Library), Stanford (Green Library), Oxford (Bodleian Library), and

the New York Public Library. While the scanner design actually used in the

project is a Google trade secret, the patent linked above is representative of

the technologies we were exploring. See also

this CNET article, or this

coverage from National Public Radio.

(Francois-Marie Lefevere, the co-inventor on this second patent, was formerly

one of my students.)

From 2011 through 2020 I built and led a team at Google that worked broadly on

cameras and photography, serving first as visiting faculty in

GoogleX,

and later as a

full-time Principal Engineer and Distinguished Engineer

in

Google Research.

The internal team name was "Gcam", which became public when this

GoogleX blog article appeared.

Our first project was burst-mode photography for

high dynamic range (HDR) imaging, which we launched in

the Explorer edition of

Google Glass.

See also this public

talk.

This work was later extended

and applied to mobile photography,

launching as

HDR+ mode

in the

Google Camera app on multiple generations of

Nexus and

Pixel smartphones.

The French agency

DxO

gave the 2016 Pixel the

highest rating ever given to a smartphone camera,

and an

even higher rating to the Pixel 2 in 2017.

Here are some albums of photos I shot with the Pixel XL:

Desolation Wilderness,

Cologne and Paris,

New York City,

Fort Ross and Sonoma County. Press "i" ("Info") for per-picture captions.

In 2017 we branched out from HDR imaging to synthetic shallow

depth of field, based loosely on my 2011

SynthCam

app for iPhones.

The first version of this technology launched as

Portrait Mode on Pixel 2 (see example above).

Here is an album of portrait mode shots of

people,

and another of

small objects

like flowers.

See also this

interview in The Verge,

this cute explanatory video about the Pixel 2's camera, and these

papers in

SIGGRAPH Asia 2016 on HDR+ and

SIGGRAPH 2018 on portrait mode.

We later extended portrait mode to use

machine learning to estimate depth

(published in

ICCV 2019),

and on Pixel 4 to use both

dual-pixels and dual-cameras.

In 2018 my team developed

(or collaborated with other teams on) several additional technologies:

Super Res Zoom (paper in

SIGGRAPH 2019),

synthetic fill-flash,

learning-based white balancing

(papers in

CVPR 2015 and

CVPR 2017),

and

Night Sight (paper in

SIGGRAPH Asia 2019).

These technologies launched on Pixel 3.

Night Sight was inspired by my prototype

SeeInTheDark app, which is described in this

public talk, but was never released as a public app.

Night Sight

has won numerous awards, including DP Review's 2018

Innovation of the Year and

the

Disruptive Device Innovation Award

at Mobile World Congress 2019.

See also these interviews by

DP Review and

CNET, and this lay person's

video tutorial by the Washington Post's Geoffrey Fowler.

In 2019 we launched a real-time version of HDR+ called

"Live HDR+", which made Pixel 4's viewfinder WYSIWYG (What You See Is What

You Get),

and

"Dual Exposure Controls", which allowed separate control over image

brightness and tone mapping interactively at time of capture - a first for any

camera. In Pixel 4 we also extended Night Sight to

astrophotography.

My team had been exploring this problem for a while; see

this

2017 article by Google team member Florian Kainz.

However, Florian's experiments required a custom-written camera app and manual

post-processing. On Pixel 4 consumers could capture similar pictures with a

single button press, either handheld or stabilized, such as Florian's stunning

tripod photograph (see above) of the Milky Way over Haleakala in Hawaii. Note

that pictures like this still require aligning and merging a burst of frames,

because over a 4-minute capture the stars do move. Is there art as well as

science in our work on Pixel phones? Lots of it; check out this short video by

Google on how

Italian art influenced the look of Pixel's photography.
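
To put a number on how far the stars move during a 4-minute capture, here is a small worked calculation in Python. The sidereal-day constant is standard astronomy; the 75-degree field of view and 4000-pixel image width are hypothetical values chosen only for illustration, not Pixel 4 specifications, and the function names are assumptions.

```python
import math

SIDEREAL_DAY_S = 86164.1  # approximate time for one rotation of Earth relative to the stars


def star_drift_degrees(exposure_s, declination_deg=0.0):
    """Angle a star moves across the sky during an exposure."""
    rotation = 360.0 * exposure_s / SIDEREAL_DAY_S
    # Stars near the celestial poles trace smaller circles than stars near the equator.
    return rotation * math.cos(math.radians(declination_deg))


def drift_pixels(exposure_s, fov_deg, width_px, declination_deg=0.0):
    """Rough drift in pixels, assuming pixels are spread evenly over the field of view."""
    return star_drift_degrees(exposure_s, declination_deg) * width_px / fov_deg


drift = star_drift_degrees(4 * 60)          # about 1 degree near the celestial equator
pixels = drift_pixels(4 * 60, 75.0, 4000)   # hypothetical camera: roughly 50 pixels
```

A drift of tens of pixels would smear stars into streaks in a single long exposure, which is consistent with the need to align and merge a burst of shorter frames.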

Finally, my team also worked on underlying technologies for

Project Jump,

a

light field camera

that captures stereo panoramic videos for VR headsets such as

Google Cardboard.

See also this May 2015 presentation at

Google I/O.

A side project I did at Google in 2016 was to record

a modified version of my quarter-long Stanford course

CS 178 (Digital Photography)

in front of a live audience (of

Googlers).

The videos and PDFs of these lectures are available to the public for free

here.

See also this YouTube

playlist.

As an epilogue to this chronology, the technologies my team launched in Pixel

phones from 2014 to 2019 were quickly adopted by other smartphone vendors. It

seems fair to say that my team's work, and our publications, accelerated the use

of smartphones for everyday photography, and disrupted the camera industry. (See

also my sidebar below about cell phones versus SLRs.) I believe it was partly

for this reason that I was inducted into the

National Academy of Engineering in 2022. However, it's important to note

that these technologies were developed by scrappy subteams of smart and creative

people (led by

Sam Hasinoff and

Yael Pritch), not by me acting as some kind of "hero inventor".

Our impact was also facilitated by Google executives (starting with Dave Burke) who believed in the

team and were willing to risk their flagship product on our strange ideas.

Oh yes, and then there is the story about how Google got its name, due to a

spelling mistake made by one of my Stanford graduate students.

To hear the full story, you'll have to treat me to a glass of wine.

Will cell phones replace SLRs?

|

Although I've largely moved from SLRs to mirrorless cameras for my big-camera

needs, I'm frankly using big cameras less and less, because they have poor

dynamic range unless I use bracketing and post-processing, and they take poor

pictures at night unless I use a tripod.

Both of these use cases have become

easy and reliable on cell phones, due mainly to computational photography.

HDR+ mode on Nexus and Pixel smartphones is

one example.

These pictures were taken with the 2015-era Nexus 6P: the left

image with HDR+ mode off, and the right image with HDR+ mode on. Click on the

thumbnails to see them at full resolution. Look how much cleaner, brighter,

and sharper the HDR+ image is. Also, the stained-glass window at the end of

the nave is not over-exposed, and there is more detail in the side arches.

|

|

Taking a step back, the tech press is fond of saying that computational

photography on cell phones is making big cameras obsolete. Let's think this

through. It is true that sales of interchangeable-lens cameras (ILCs), which

include SLRs and mirrorless cameras, have

dropped by two-thirds since 2013, and

point-and-shoot cameras have largely disappeared, in lockstep with the

growth of

mobile phones.

Similarly,

Photokina, the world's largest photography trade show, shrank by half from

2015 to 2020, and was finally canceled forever in 2021, having been partly

supplanted by

Mobile World Congress.

There are confounding factors at play here, including the decimation of

professional photography niches like photojournalism, due to shifts in how news

is gathered and disseminated. But improvements in the quality of mobile

photography over the last decade are certainly a contributor to these changes.

Rishi Sanyal, Science Editor for DP Review, who has written extensively about

Pixel 3 and

Pixel 4, once estimated that

the signal-to-noise ratio (SNR) of merged-RAW files from Pixel 4 matches that

of RAW files from a micro-four-thirds camera. I think Pixel's dynamic range is

actually better - roughly matching that of a full-frame camera, due to low read

noise on Sony's recent mobile sensors, but in either case the trend is clear.

I also note that as we move from SLRs to mirrorless cameras, hence from optical

to electronic viewfinders, the dynamic range of the viewfinder becomes more

important. In this respect the Pixel 4, with its

"Live HDR+", firmly beats the electronic viewfinders on big mirrorless

cameras. (The SNR of cell phone viewfinders still lags, but I expect this to

become better in the future.) When Pixel 2 won DP Review's

Innovation of the Year in 2017,

comments in the user forums were running 60/40 in favor of "This isn't real

photography." By the time Pixel 3 won

the same award in 2018, user forum comments were running 80/20 in

favor of "Why aren't the SLR makers doing this?" Actually, a few mirrorless

cameras do offer single-shutter-press burst capture and automatic merging of

frames. But as of 2020 these modes produce only JPEGs, not merged RAW files,

and their merging algorithms use only global homographies, not robust

tile-by-tile alignment. As a result they work poorly when the camera is

handheld or there is motion in the scene.
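
The difference between a single global transform and tile-by-tile alignment can be illustrated with a brute-force Python sketch. This is an assumption-laden toy, not the alignment used in HDR+ or in any camera: the names `tile_offset` and `align_tiles` are hypothetical, offsets are whole pixels, and the search is exhaustive rather than coarse-to-fine. The synthetic scene has its left half moving down and its right half moving up, so no single translation fits both.

```python
import random


def tile_offset(ref, alt, top, left, size, radius):
    """Find the integer (dy, dx) that best matches one reference tile in alt."""
    height, width = len(alt), len(alt[0])
    best, best_cost = (0, 0), float("inf")
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if not (0 <= top + dy and top + dy + size <= height
                    and 0 <= left + dx and left + dx + size <= width):
                continue  # candidate tile would fall outside the frame
            cost = sum(
                abs(ref[top + r][left + c] - alt[top + dy + r][left + dx + c])
                for r in range(size) for c in range(size)
            )
            if cost < best_cost:
                best, best_cost = (dy, dx), cost
    return best


def align_tiles(ref, alt, size=8, radius=2):
    """Estimate a separate offset for every size-by-size tile of ref."""
    return {
        (top, left): tile_offset(ref, alt, top, left, size, radius)
        for top in range(0, len(ref) - size + 1, size)
        for left in range(0, len(ref[0]) - size + 1, size)
    }


def texture(y, x):
    """Deterministic pseudo-random texture value for any pixel coordinate."""
    return random.Random((y + 100) * 1000 + (x + 100)).random()


HEIGHT, WIDTH = 24, 32
ref = [[texture(y, x) for x in range(WIDTH)] for y in range(HEIGHT)]
# Left half of the scene moves down one pixel; right half moves up one pixel.
alt = [[texture(y - 1, x) if x < 16 else texture(y + 1, x) for x in range(WIDTH)]
       for y in range(HEIGHT)]
offsets = align_tiles(ref, alt)
```

In this toy scene the middle-row tiles on the left recover an offset of `(1, 0)` and those on the right recover `(-1, 0)`. Tiles along the top and bottom edges may not, because their true match can fall outside the frame.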

It's hard to resist concluding that this is a classic case of "disruptive

technology", as described by Clayton Christensen in

The Innovator's Dilemma.

Cell phones entered at the bottom of the market, so traditional camera makers

didn't see them as a threat to their business. Slowly but inexorably, mobile

photography improved, due to better sensors and optics, and to algorithmic

innovations by Google and its competitors. Computational photography played

a role, and so did machine learning.

So also did Google's culture of

publication, which allowed other companies to become "fast-followers". By

the time traditional camera companies realized that their market was in danger

it was too late. Even now (in 2020), they seem slow to respond. I analyzed

their slowness in this

2010 article,

the same year we published our

Frankencamera architecture

(commercialized by Google in its

Camera2/HAL3 API).

Little has changed since then, except that cell phones

have become more capable. Full disclosure: one factor I missed in my 2010

article was that while large cameras have powerful special-purpose image

processing engines, they have relatively weak

programmable processors. At the same time, they have more pixels

to process than cell phones, because their sensors are larger. These two

factors made innovation difficult.

In the end, it's hard to avoid thinking it's "Game Over" for ILCs, except in

niches like

sports and wildlife photography, where big glass is mandated by the laws of

physics.

Even

Annie Leibovitz thinks this.

For the rest of us, it's not only that

"the best camera is the one that's

with you"; it may also be true

that the camera that's with you

(meaning your cell phone)

is your best camera!

ChatGPT's version of this sidebar, rewritten in hip-hop style.

My Adobe career

A history yet to be written. 😉

But we're hiring! Especially MS and PhD graduates

with expertise in computational photography,

computer vision, and machine learning.

Check out these job opportunities:

https://www.linkedin.com/jobs/view/2470596140 and

https://www.linkedin.com/jobs/view/2429222366.

See also this 1-hour, in-depth

podcast / interview

by Nilay Patel of The Verge, on

camera hardware, software, and artistic expression at Google and Adobe.

And this 10-minute talk

at Adobe MAX 2020 about

computational photography at the point of capture.

In March 2022

Adobe interviewed me

about my work in photography,

following my election to the

National Academy of Engineering.

See also this coverage in

Petapixel.

Here's a nice July 2022

interview by Atila Iamarino of Brazilian public television, about

computational photography in general, presented in a mix of English and

Portuguese.

Here is my Adobe team's gift to me of a

superhero backstory.


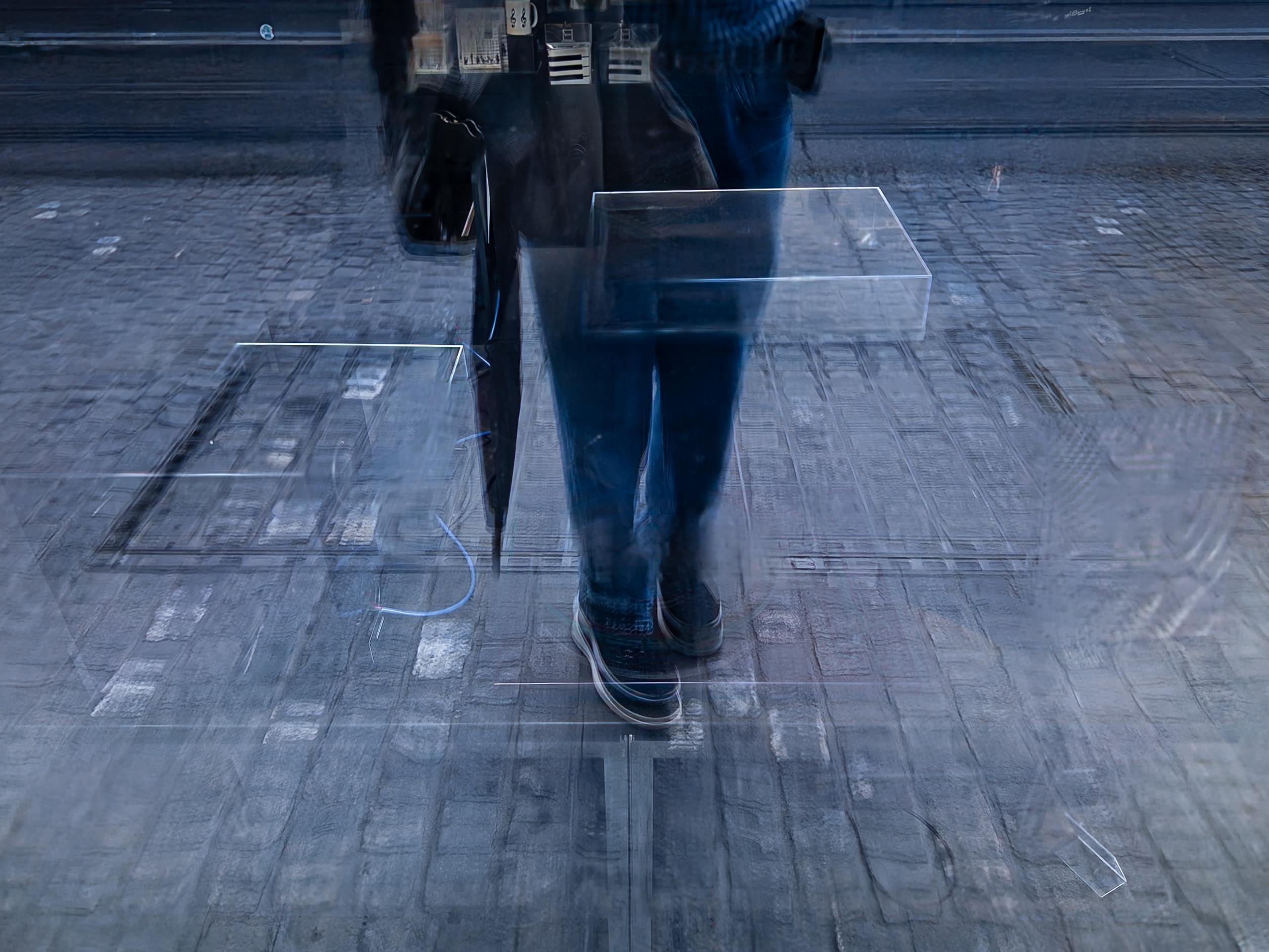

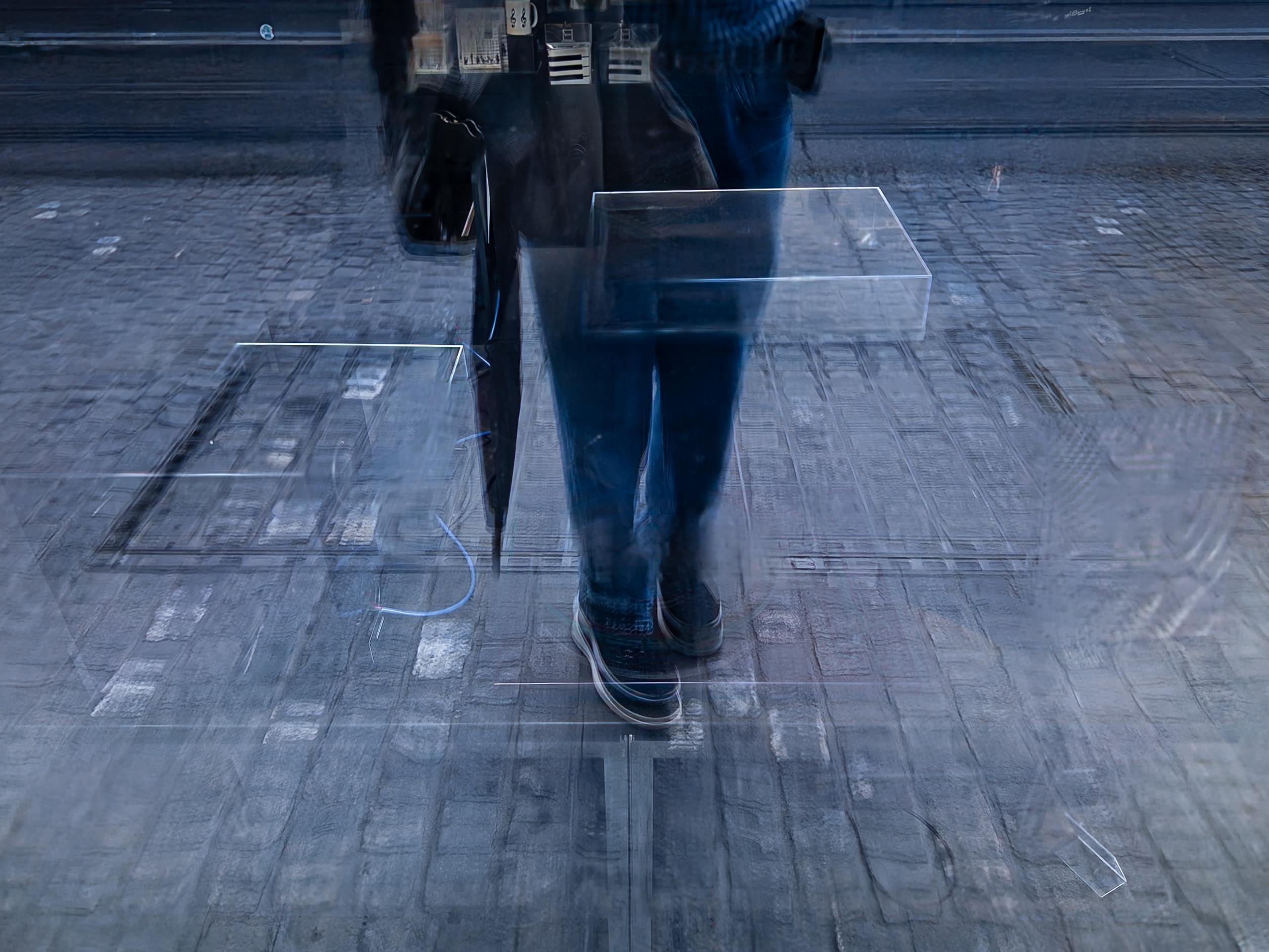

Here is an

Adobe Max 2023 Sneak Peek that my team presented on automatically removing

undesirable window reflections. The first image at left is a smartphone photo I

captured of a phonograph turntable in a Zurich storefront. Our AI produces the

second image, with the reflections (mostly) removed, and optionally the third

image, showing the reflected scene. In this image you can see me standing in

front of the store with my umbrella, because it was raining. Features in this

image appear doubled (or more) due to presence of double-pane glass. The Sneak

was called #projectseethrough. Here is a video of the

live presentation

in Los Angeles by team member Eric Kee.

Twitter/X seemed to like the presentation.


Also featured at Adobe MAX 2023 were versions of

Lightroom

and Adobe Camera Raw (ACR)

that support editing of high dynamic range (HDR) images.

Adobe collectively calls these capabilities

HDR Optimization. For more details on this technology, see this

blog by ACR architect Eric Chan. If you stopped by the Lightroom booth at

MAX, you might have seen the photograph at left that I took in the dining room of

Yosemite's Ahwahnee Hotel. The first image is standard dynamic range (SDR), and

the second is high dynamic range (HDR). To see the second one, you must be

viewing this web page in Chrome version 117 or later on a suitable HDR display,

such as a 14" or 16" MacBook Pro 2021 or later, or an Apple XDR monitor. The

third image includes some guy having his morning coffee, with help from

Photoshop Generative Fill. For more examples of SDR/HDR pairs, see this

album from a recent trip to France and

Switzerland.

|

Photography

My 5-year journey from SLRs to cell phones


Turkey, June 2015

|

Myanmar, December 2016

|

Antarctica, December 2017

|

Italy, Switzerland, Germany, 2018

|

Barcelona, February 2019

|

New York, March 2020

|

Other favorite Pixel 3 and Pixel 4 albums

(with a Google Photos parlour trick in the last album)


(Mostly) nature shots

|

New York, January 2020

|

Pixel 4 launch event

|

Prague to Vienna by bicycle

|

Eastern Sierras, August 2019

|

Portrait Blur on famous paintings

|

Selected older travel albums


India, December 2008

|

Thailand/Cambodia, December 2013

|

Chile and Patagonia, December 2015

|

Van trip across EU, June 2016

|

Croatia and Italy, June 2017

|

Tour of the Baltics, June 2018

|

Autobiography

|

I was invited to give the commencement address at the

2012 Doctoral Hooding Ceremony of the University of North Carolina

(from which I graduated with a doctorate in 1989).

After the ceremony a number of people asked for the text of my address. Here

it is, retrospectively titled

"Where do disruptive ideas come from?". Or here is

UNC's version,

with more photos like the one at left. And here is the

video.

|

|

Portrait photographer

Louis Fabian Bachrach took some nice photographs (here and

here) of me in 1997

for the Computer Museum in Boston (now closed). I do occasionally wear

something besides blue dress shirts. Here are shots with other shirts, from

August 2001 and

July 2003.

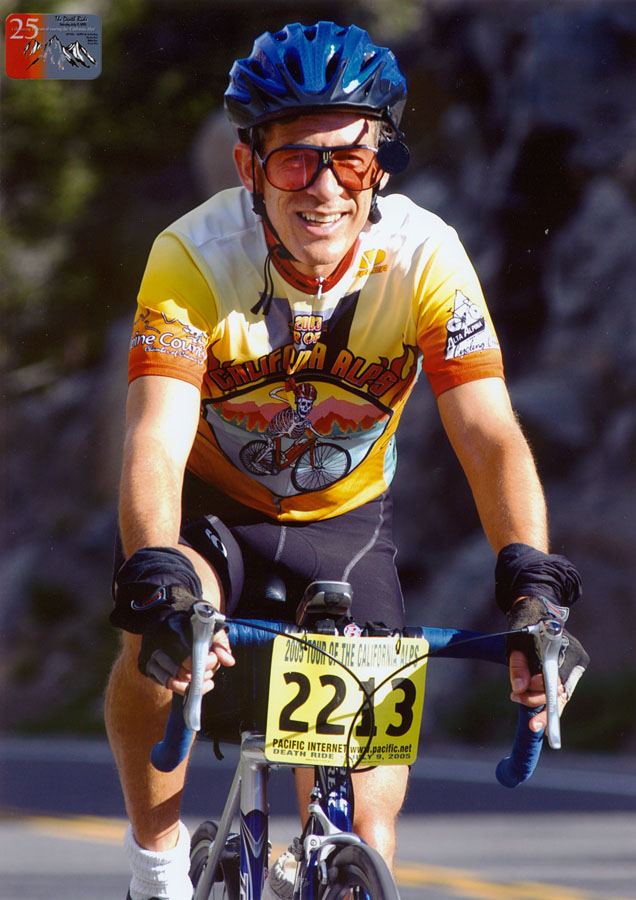

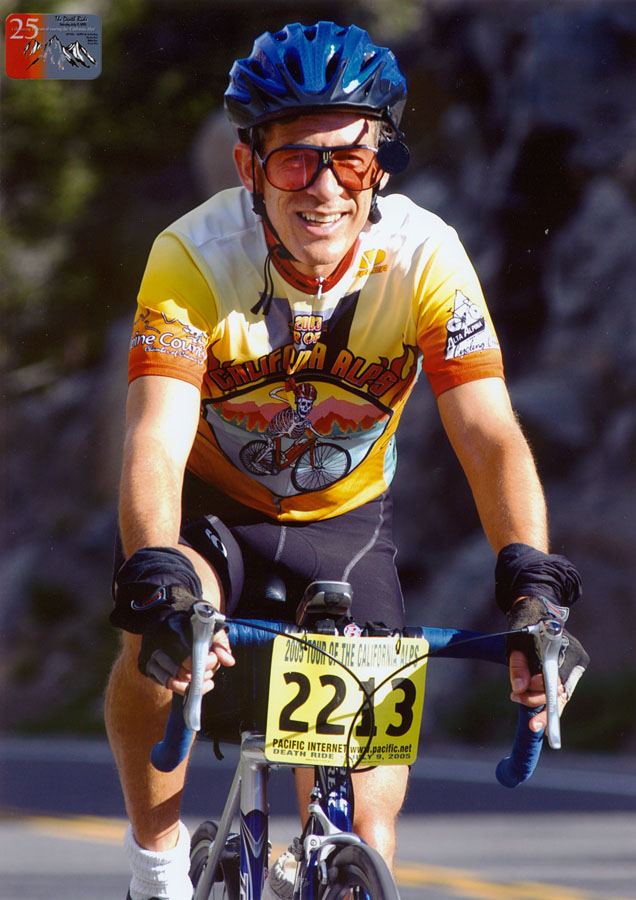

Yes, that's a Death Ride T-shirt in the

last shot. I also rode in 2005, and yes, I finished all 5 passes - 15,000 feet

of climbing. That's why I'm smiling in the official ride photograph (shown at

left), taken at the top of Carson Pass after 12 hours of cycling.

|

|

During a 1998-99 sabbatical in Italy,

my students and I digitized 10 of

Michelangelo's statues in Florence. We called this the

Digital Michelangelo Project.

Here are some

photographic essays about personal aspects of the sabbatical.

In particular, I spent the year

learning to carve in marble.

At left is my first piece - a mortar with decorated supports.

Here are some sculptures by

my mother,

who unlike me had real talent.

|

|

The Digital Michelangelo Project was not my first foray into measuring and

rendering 3D objects. Here are some drawings I made in college for the

Historic American Buildings Survey.

In this project the measuring was done by hand - using rulers, architect's

combs, and similar devices.

|

|

I still like finding and measuring old objects, especially if it involves

getting dirty. This photographic essay describes a week I spent on an

archaeological dig

in the Roman Forum. The image at left, taken during the dig,

graces the front cover of

The Bluffer's Guide to Archaeology.

|

|

My favorite radio interview, by Noah Adams of National Public Radio's All Things

Considered - about the diverging gaze directions of the two eyes in

Michelangelo's David (June 13, 2000). (Click to hear the interview using

RealAudio at

14.4Kbs or

28.8Kbs, or as a

.wav file.)

|

|

This interview, by Guy Raz, weekend host of All Things Considered, runs a close

second. It's about the

Frankencamera (October 11, 2009),

pictured at left.

(Click here for NPR's

web page containing the story and pictures,

and here for a direct link to the audio as an

.mp3 file.)

Finally, here's a text-only

interview at SIGGRAPH 2003, by Wendy Ju,

with reminiscences of my early mentors in computer graphics.

|

|

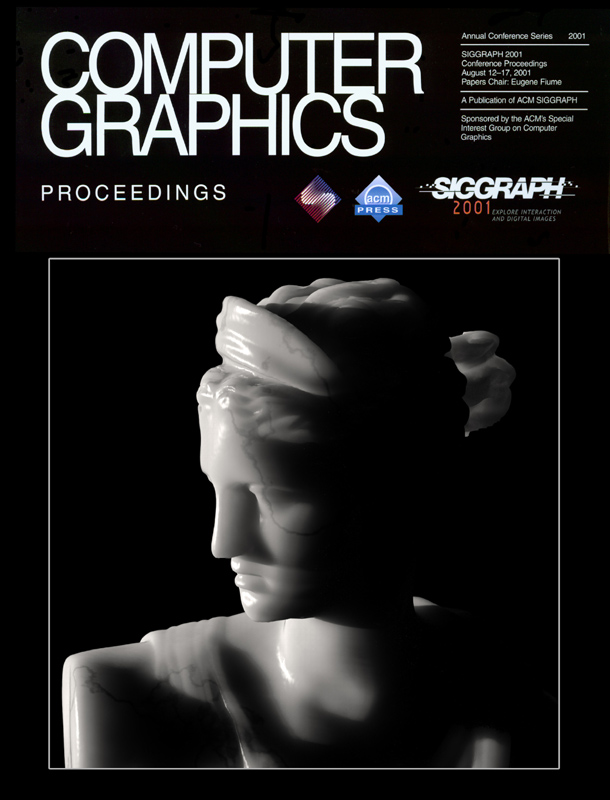

Speaking of computer graphics, I'm fond of the front cover of the

SIGGRAPH 2001 proceedings.

The image is from a paper (in the proceedings) on

subsurface scattering,

co-authored with Henrik Wann Jensen, Steve Marschner, and Pat Hanrahan.

And check out this milk.

This paper won a Technical Academy Award in 2004.

Subsurface scattering is now ubiquitous in CG-intensive movies.

|

|

However, not everything went smoothly at SIGGRAPH 2001. A

more-strenuous-than-expected after-SIGGRAPH hike inspired Pat Hanrahan's and my

students to create this humorous movie poster. Click

here for the innocent version of

this story. And here for the real

story.

|

Genealogy

|

A lot of my early Stanford research related to volume data. The cause may be

genetic. My mother's cousin David Chesler is credited with the first

demonstration of filtered backprojection, the dominant method used in

computed tomography (CT) and positron emission tomography (PET) for combining

multiple projections to yield 3D medical data. Here is a

description

of his

contributions.

|

|

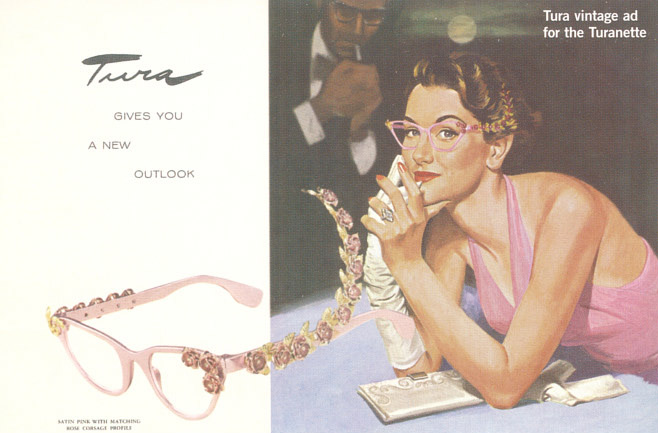

My father's genes also seem to be guiding my research tastes. Optics has been

in my family for four generations. My father Barton Monroe Levoy and my

grandfather Monroe Benjamin Levoy were opticians and sellers of eyeglasses

through their company, Tura. At left is an early

brochure.

Tura is still alive and well, with corporate headquarters in New York City,

although it is no longer in the family. Here is a marvelously illustrated

timeline

they assembled about the history of the

company; it includes the image at left.

|

|

Going back further, my great-grandfather Benjamin Monroe Levoy sold eyeglasses,

cameras, microscopes, and other optical instruments in New York City a century

ago. Here is a piece of stationery from his store. He later

moved to 42nd Street, as evidenced by the address on the case of these

eyeglasses, remade as pince-nez with a retractor.

(At left is a closeup of the embossed address.)

And here is a

wooden box

he used to mail eyeglasses to customers.

The stamp is dated 1902.

|

|

In the drawing (at left)

illustrating that stationery, I believe you can see a microscope. In

any case I have an old microscope from his store. This

specimen of a silkworm mouth,

which accompanied the microscope, appears in our SIGGRAPH 2006 paper on light field

microscopy.

|

|

My great-grandfather apparently also sold binoculars from the store. This pair, made by Jena Glass about 80

years ago and inscribed with the name B.M. Levoy, New York, was recovered by

a SWAT team during a drug raid in South Florida in 2008. They have undoubtedly

passed through many hands during their long and storied life. It would be

fascinating to watch a video of everything these lenses have seen.

|

|

When my Stanford students worked with me at the optical bench, they were

perplexed by my arcane knowledge of mirror technology. Returning to my

mother's side of the family, my great-grandfather Jacob Chesler built a

factory in Brooklyn (pictured at

left) that made hardware. The building passed to my grandfather Nathan

Chesler, who converted it to making decoratively bevelled mirrors. I spent

many pleasant hours studying the factory's machinery, designed by my uncle

Bertram Chesler, for

silvering large plate-glass mirrors.

|