Abstract

Realism in computer-generated images requires accurate input models for lighting, textures and BRDFs. One of the best ways of obtaining high-quality data is through measurements of scene attributes from real photographs by inverse rendering. However, inverse rendering methods have been largely limited to settings with highly controlled lighting. One of the reasons for this is the lack of a coherent mathematical framework for inverse rendering under general illumination conditions. Our main contribution is the introduction of a signal-processing framework which describes the reflected light field as a convolution of the lighting and BRDF, and expresses it mathematically as a product of spherical harmonic coefficients of the BRDF and the lighting. Inverse rendering can then be viewed as deconvolution. We apply this theory to a variety of problems in inverse rendering, explaining a number of previous empirical results. We show why certain problems are ill-posed or numerically ill-conditioned, and why other problems are more amenable to solution. The theory developed here also leads to new practical representations and algorithms.

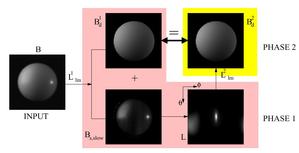

For instance, we present a method to factor the lighting and BRDF from a small number of views, i.e. to estimate both simultaneously when neither is known.

Background

One of the primary goals of computer graphics has been the creation of photorealistic computer-generated images and environments. This task requires accurate geometry, physically-based rendering algorithms, and accurate material and lighting models. Towards this end, the 70s and 80s saw an explosion of work in geometric modeling, while the 80s and 90s saw a great deal of work on efficient and physically correct methods for simulating light transport. With these problems tackled fairly successfully, the major remaining challenge is obtaining physically-based lighting, texture, and reflectance (BRDF) models; the lack of accurate models for these quantities is now the main factor limiting realism. For this reason, image-based rendering, which in its simplest form uses view interpolation to construct new images from acquired data without creating a conventional scene model, is becoming widespread. This mirrors a trend in other areas of graphics, where range data is used for geometric modeling and motion capture for scene dynamics.

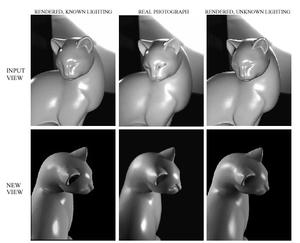

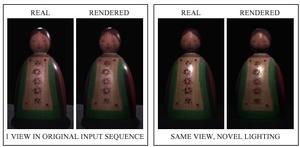

The quality of view interpolation in image-based rendering may be significantly improved if it is coupled with inverse rendering. Inverse rendering measures rendering attributes (lighting, textures, and BRDF) from photographs. Whether traditional or image-based rendering algorithms are used, the rendered images are then based on measurements from real objects and therefore appear very similar to real scenes. Measuring scene attributes also introduces structure into the raw imagery, making it easier to manipulate the scene in intuitive ways. For example, an artist can change the material properties or the lighting independently.

However, most previous work in inverse rendering has been conducted under highly controlled lighting conditions, usually by carefully positioning a single point source. Previous methods have also usually tried to recover only one of the unknowns: texture, BRDF, or lighting. The usefulness of inverse rendering would be greatly enhanced if it could be applied under general uncontrolled lighting, and if we could simultaneously estimate more than one unknown. For instance, if we could recover both the lighting and BRDF, we could determine BRDFs under unknown illumination. One reason there has been relatively little work in these areas is the lack of a common theoretical framework for determining under what conditions inverse problems can and cannot be solved, and for making principled approximations.

Our main contribution is the development of a theoretical framework for analyzing the reflected light field from a curved convex homogeneous surface under distant illumination. We believe this framework provides a solid mathematical foundation for many areas of computer graphics. With respect to inverse rendering, we are able to obtain the following results:

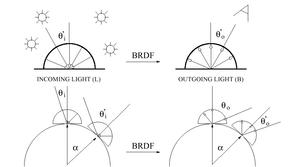

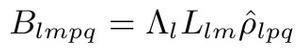

Reflection as Convolution

We are able to formalize the notion of the reflection operator as a directional convolution, deriving a simple convolution formula for the reflected light field. Thus, in frequency space, the integral for the reflected light can be thought of as a simple product of the spherical harmonic coefficients of the lighting and BRDF. In other words, the reflected light field can be thought of, in a precise quantitative way, as obtained by convolving the lighting and BRDF, i.e. by filtering the illumination using the BRDF. We believe this is a useful way of analyzing many computer graphics problems. In particular, inverse rendering can be viewed as deconvolution.
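The sketch below illustrates reflection as convolution in the simpler 2D (circular) setting. In this setting Fourier series take the place of spherical harmonics. It makes several assumptions: the BRDF is radially symmetric and is modeled by a hypothetical Gaussian lobe (`periodic_gaussian` and `sigma` are illustrative choices, not from the paper), and the lighting is random. The code first computes the reflected light by direct angular convolution. It then checks that the result has the same Fourier coefficients as the product of the lighting and BRDF coefficients. Finally, it shows that dividing those coefficients out (deconvolution) recovers the lighting when there is no noise.

```python
import numpy as np


def circular_convolve(lighting, kernel):
    """Direct angular convolution on a uniformly sampled circle:
    B[i] = sum_j kernel[j] * lighting[(i - j) mod n]."""
    n = len(lighting)
    idx = (np.arange(n)[:, None] - np.arange(n)[None, :]) % n
    return lighting[idx] @ kernel


def periodic_gaussian(n, sigma):
    """Hypothetical radially symmetric BRDF filter: a unit-sum Gaussian lobe
    centred at angle 0, wrapped around the circle."""
    theta = 2 * np.pi * np.arange(n) / n
    dist = np.minimum(theta, 2 * np.pi - theta)  # angular distance to 0
    lobe = np.exp(-dist**2 / (2 * sigma**2))
    return lobe / lobe.sum()


n = 64
rng = np.random.default_rng(0)
lighting = rng.random(n)                  # arbitrary non-negative lighting L(theta)
kernel = periodic_gaussian(n, sigma=0.1)  # assumed glossy BRDF lobe

# Forward rendering: reflected light = lighting convolved with the BRDF filter.
reflected = circular_convolve(lighting, kernel)

# Convolution theorem: the same result in frequency space is a product.
product = np.fft.fft(lighting) * np.fft.fft(kernel)
assert np.allclose(np.fft.fft(reflected), product)

# Inverse lighting as deconvolution: divide out the BRDF coefficients.
recovered = np.real(np.fft.ifft(np.fft.fft(reflected) / np.fft.fft(kernel)))
assert np.allclose(recovered, lighting)  # exact up to rounding without noise
```

Without noise, this recovery works for any filter whose Fourier coefficients are all nonzero. With noisy measurements, small coefficients amplify the noise. That is the conditioning question taken up in the next section.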

Well-posedness and Conditioning of Inverse Problems

Inverse problems can be ill-posed: there may be no solution or several solutions. They are also often numerically ill-conditioned, i.e. extremely sensitive to noisy input data. From our theory, we are able to analyze the well-posedness and conditioning of a number of inverse problems, explaining many previous empirical observations. This analysis can serve as a guideline for future research. We are able to obtain analytic results for a number of specific cases, including:

- General Observations: We can quantify the precise conditions under which inverse lighting and inverse BRDF are well-conditioned. The results agree with our intuitions. Inverse lighting is well-conditioned for BRDFs with sharp specularities, the ideal case of which is a mirror (gazing ball), and ill-conditioned for diffuse BRDFs, the worst case of which is a Lambertian surface. Inverse BRDF calculations are well-conditioned when the lighting has sharp features such as edges or, ideally, directional sources. They are ill-conditioned for diffuse lighting.

- Directional Source, Mirror BRDF: Both can be described by delta functions, whose frequency spectra are flat. Thus, inverse lighting and inverse BRDF computations are well-conditioned. This is a frequency-space explanation for the use of gazing (mirrored) spheres to capture lighting, and for image-based BRDF measurement under a directional source.

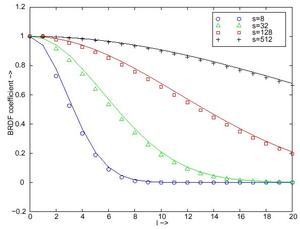

- Microfacet and Phong BRDFs: Analytic formulae can be derived for the frequency-space coefficients of these BRDFs. These formulae can be approximated as Gaussian functions, whose width depends on the surface roughness or the Phong exponent. One of the key results is a quantitative derivation showing that estimating the surface roughness of a microfacet BRDF (or the exponent of a Phong BRDF) is ill-conditioned under low-frequency lighting.

- Lambertian BRDF: Over 99% of the energy can be shown to lie within the first three orders (l = 0, 1, 2) of spherical harmonic modes. This implies, among other things, that the irradiance can be approximated using only 9 parameters, i.e. as a quadratic polynomial of the Cartesian components of the surface normal. It also implies that only the first 9 coefficients of the lighting can be recovered from a Lambertian object.

- Factorization: We derive an analytic formula for factoring the reflected light field into the lighting and BRDF. In doing so, we show that if the BRDF is assumed to be reciprocal, the light field can be factored up to a global scale factor.
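The conditioning claims for directional sources, mirrors, and Gaussian-like (microfacet or Phong) filters can be checked numerically in a 2D circular sketch. The sketch rests on three assumptions. A delta kernel stands in for a mirror BRDF (or for a directional source, when solving for the BRDF instead). Two Gaussian lobes of hypothetical widths stand in for glossy and rougher BRDFs. The noise level is arbitrary.

The analysis works because circular convolution is a circulant matrix, and the singular values of such a matrix are the magnitudes of the kernel's DFT coefficients. Its condition number is therefore the ratio of the largest to the smallest coefficient magnitude. The delta's flat spectrum gives a condition number of 1. Wider lobes attenuate high frequencies and so amplify noise when deconvolved.

```python
import numpy as np


def periodic_gaussian(n, sigma):
    """Hypothetical glossy BRDF filter: unit-sum Gaussian lobe wrapped on a circle."""
    theta = 2 * np.pi * np.arange(n) / n
    dist = np.minimum(theta, 2 * np.pi - theta)
    lobe = np.exp(-dist**2 / (2 * sigma**2))
    return lobe / lobe.sum()


def condition_number(kernel):
    """Condition number of circular convolution by `kernel`. The operator is a
    circulant (hence normal) matrix, so its singular values are the magnitudes
    of the kernel's DFT coefficients."""
    mags = np.abs(np.fft.fft(kernel))
    return mags.max() / mags.min()


def deconvolve(observed, kernel):
    """Frequency-space division by the kernel's DFT coefficients."""
    return np.real(np.fft.ifft(np.fft.fft(observed) / np.fft.fft(kernel)))


n = 64
rng = np.random.default_rng(1)
lighting = rng.random(n)
noise = 1e-3 * rng.standard_normal(n)  # assumed measurement noise level

delta = np.zeros(n)
delta[0] = 1.0  # delta filter: mirror BRDF (same flat spectrum as a directional source)
kernels = {
    "delta (mirror)": delta,
    "narrow lobe": periodic_gaussian(n, 0.1),
    "broad lobe": periodic_gaussian(n, 0.2),
}

conds, errors = {}, {}
for name, kernel in kernels.items():
    clean = np.real(np.fft.ifft(np.fft.fft(lighting) * np.fft.fft(kernel)))
    estimate = deconvolve(clean + noise, kernel)
    conds[name] = condition_number(kernel)
    errors[name] = np.sqrt(np.mean((estimate - lighting) ** 2))  # RMS error
    print(f"{name:15s} cond = {conds[name]:9.3g}   RMS error = {errors[name]:9.3g}")
```

Each unit-sum, non-negative kernel has DFT magnitudes of at most 1. Deconvolution can therefore only amplify each noise frequency, and the broader the lobe, the more it amplifies. The delta case returns the noisy data unchanged.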
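The Lambertian energy claim can be reproduced from the spherical harmonic expansion of the clamped cosine max(cos θ, 0). This sketch assumes the radially symmetric form of the convolution result, E_lm = Â_l L_lm, with Â_l = sqrt(4π/(2l+1)) A_l. Here A_l is the clamped cosine's coefficient on the zonal harmonic Y_l0. The quadrature scheme and the function names are illustrative choices. Because the kernel is zonal, its energy is the sum of A_l². Orders l ≤ 2 correspond to 1 + 3 + 5 = 9 lighting coefficients L_lm.

```python
import numpy as np
from numpy.polynomial.legendre import Legendre, leggauss

# 64-point Gauss-Legendre rule mapped from [-1, 1] to [0, 1]; it integrates
# the polynomial integrands below exactly (up to degree 127).
nodes, weights = leggauss(64)
u = 0.5 * (nodes + 1.0)  # u = cos(theta) over the upper hemisphere
w = 0.5 * weights


def clamped_cosine_coeff(l):
    """A_l: coefficient of max(cos theta, 0) on the zonal harmonic Y_l0,
    A_l = 2*pi * integral_0^1 u * Y_l0(u) du, Y_l0 = sqrt((2l+1)/(4*pi)) P_l(u)."""
    y_l0 = np.sqrt((2 * l + 1) / (4 * np.pi)) * Legendre.basis(l)(u)
    return 2 * np.pi * np.sum(w * u * y_l0)


def lambertian_filter(l):
    """Assumed convolution factor A_hat_l = sqrt(4*pi/(2l+1)) * A_l, so that
    irradiance coefficients are E_lm = A_hat_l * L_lm."""
    return np.sqrt(4 * np.pi / (2 * l + 1)) * clamped_cosine_coeff(l)


# Total energy of the kernel: 2*pi * integral_0^1 u^2 du = 2*pi/3.
# The kernel is zonal, so its energy is the sum of A_l^2 over l (Parseval).
total_energy = 2 * np.pi / 3
low_order_energy = sum(clamped_cosine_coeff(l) ** 2 for l in range(3))
fraction = low_order_energy / total_energy

for l in range(7):
    print(f"l = {l}: A_hat = {lambertian_filter(l):+.6f}")
print(f"energy fraction in l <= 2: {fraction:.4f}")
```

For l = 0 to 6, running this should give Â_l close to π, 2π/3, π/4, 0, -π/24, 0, and π/64. It should also give an energy fraction of about 0.9922 (exactly 127/128) for l ≤ 2. Because the odd coefficients vanish for l ≥ 3, those lighting modes leave no trace in the irradiance. The higher even coefficients are small enough to be easily swamped by noise.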

New Practical Representations and Algorithms

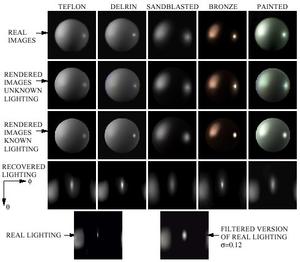

Insights from the theory lead to the derivation of a simple practical representation, which can be used to estimate BRDFs under complex lighting. The theory also leads to novel frequency-space and hybrid angular- and frequency-space methods for inverse problems. These include two new algorithms for estimating the lighting and an algorithm for simultaneously determining the lighting and BRDF. Therefore, we can recover BRDFs under general, unknown lighting conditions.

Results

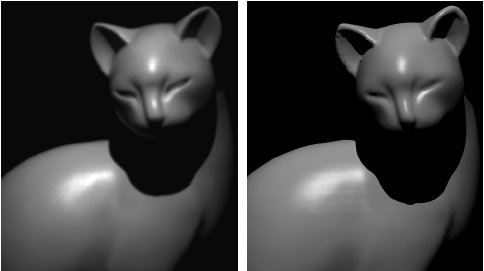

The images on the right, with their captions, illustrate some of our results; more information is found in the paper. Note that for all the result figures on the right (1, 7, 8, 9), the BRDFs were estimated under complex lighting consisting of a combination of a large area source and one or two point sources. Furthermore, we are able to estimate the BRDF (and lighting) even under complex unknown illumination.

We expect the theoretical results, particularly the formalization of reflection as a convolution, to have broad impact in a number of areas in computer graphics and vision. This paper discusses just one of those areas, namely inverse rendering. Some of the companion papers below go into more depth in other areas, such as forward rendering. The results are also likely to be of broad interest in computer vision. One application is recognition under variable lighting, for which Basri and Jacobs have independently derived and used the same theory for the Lambertian BRDF.

Relevant Links

Siggraph 2001 paper in Gzipped Postscript (3.7M) or PDF (1M)

Related papers

- Theory in 2D. This may be simpler to read since it involves Fourier transforms rather than spherical harmonics and has most of the key insights.

- Theory for Lambertian case. A detailed analysis of the Lambertian BRDF, an important special case of the theory.

- Application of above to Environment Mapping. This is a short paper which shows how the Lambertian case results can be applied to new methods of rendering diffuse objects with environment maps.

Slides for Siggraph talk PPT (1.3M)